Creating a Custom Connector Using Enable S3 Pull

System admins can configure a custom inbound connector to pull data directly from a data vendor’s S3 data lake into the Nitro data lake. This removes the need for customers to stage their data in costly SFTP locations.

Nitro uses customer-specific IAM roles to provide better data governance and security in the data lake. For data exchange between a source and Nitro, the customer's role requires list and get permissions on the specific data folder in the source S3 bucket. If you are unsure how to manage S3 bucket permissions, see the AWS documentation for managing bucket policies.
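This Python sketch shows the list and get permissions described above. It builds an S3 bucket policy that grants one IAM role `s3:ListBucket` on a single folder prefix and `s3:GetObject` on the objects under that prefix. The role ARN, bucket, and folder names are hypothetical placeholders, and this is not the policy Nitro generates. When you set up a connector, use the policy information that Nitro provides.

```python
import json


def build_pull_policy(role_arn: str, bucket: str, folder: str) -> dict:
    """Sketch: bucket policy granting list/get on one folder to one role."""
    prefix = folder.strip("/") + "/"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Allow the role to list only keys under the data folder.
                "Sid": "AllowListDataFolder",
                "Effect": "Allow",
                "Principal": {"AWS": role_arn},
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}*"]}},
            },
            {
                # Allow the role to read objects stored under the data folder.
                "Sid": "AllowGetDataFolder",
                "Effect": "Allow",
                "Principal": {"AWS": role_arn},
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    policy = build_pull_policy(
        role_arn="arn:aws:iam::123456789012:role/example-nitro-role",
        bucket="example-vendor-bucket",
        folder="myFolder",
    )
    print(json.dumps(policy, indent=2))
```

The `s3:prefix` condition stops the role from listing other folders in the bucket. The `GetObject` resource ARN restricts reads to the same folder.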

The Nitro AWS role timeout is set to 12 hours so that users can upload large files using S3 without the system timing out.

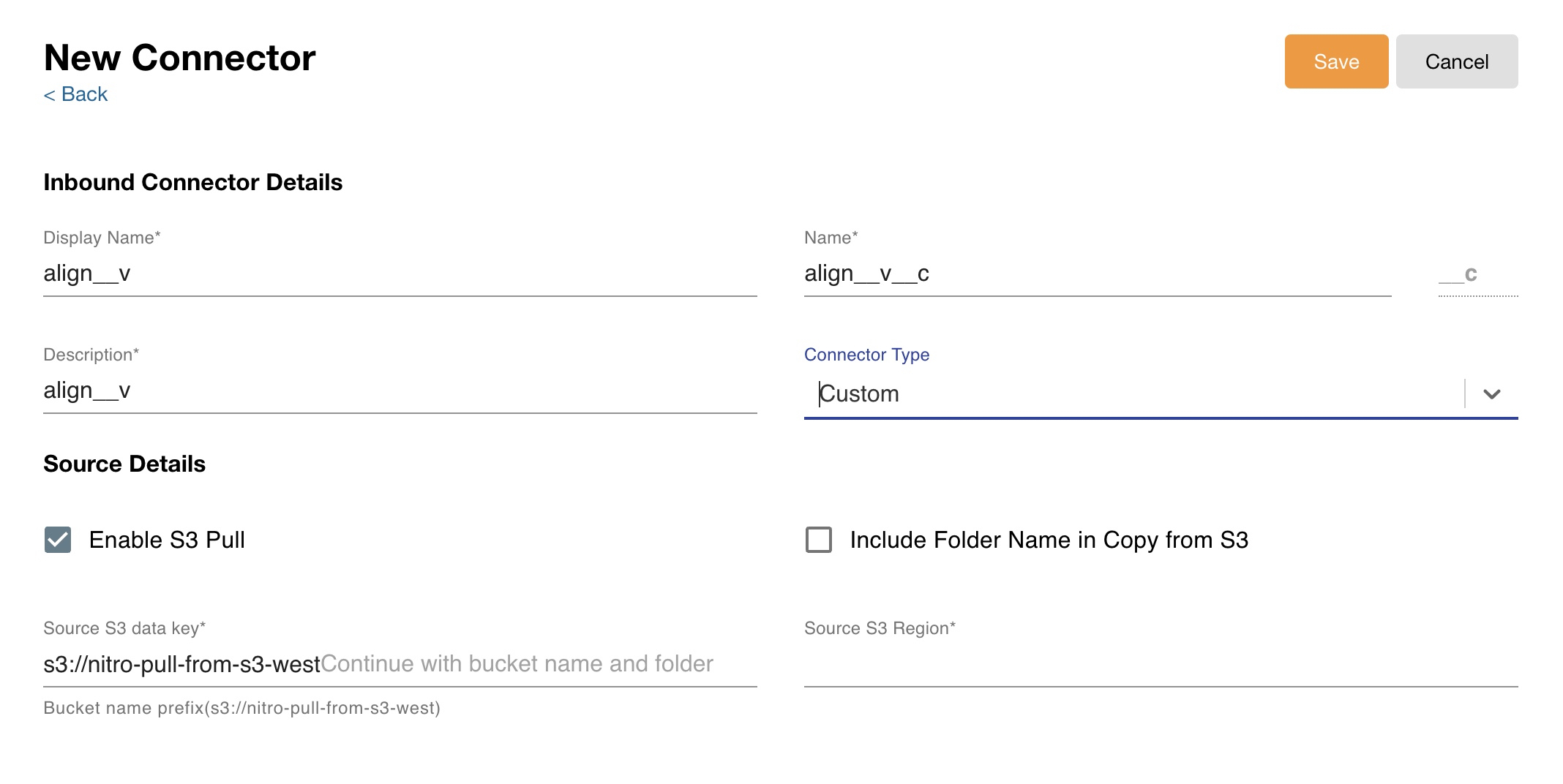

To create an inbound connector:

-

Log into the NAC.

-

Select Connectors > Inbound Connectors from the side menu.

-

Select the New Connector button.

-

Complete the Inbound Connector Details fields.

-

Select Custom as the Connector Type.

-

Select the Enable S3 Pull check box.

- Select Include Folder Name in Copy from S3 to append folder names to the file names when only net new files are pulled into Nitro.

This step is optional.

If "Include Folder Name in Copy from S3" is selected, you must use the FTP/Data Lake Connector Load job. Do not use the FTP/Data Lake Intelligent Load job.

-

Complete the Source S3 data key and Source S3 Region fields with your system's information. Optionally, admins can specify the file extension or regex pattern they want to use. The following folder designations are available:

If your bucket name prefix does not match the displayed bucket name prefix, submit a support ticket. Include the name of your source bucket name and request that it be added to the allowlist.

Source S3 Data Key Designation

Description

s3://veevaQA-myBucket

The entire contents of the bucket are processed.

s3://veevaQA-myBucket/myFolder

All files in the specified folder are copied. The folder name can be specified with or without a forward slash (/).

s3://veevaQA-myBucket/myFolder/*.csv

Only uncompressed or compressed files in the specified folder with file-type extension of .csv are copied.

s3://veevaQA-myBucket/myFolder/myfiles*.csv;*.json

Only uncompressed and compressed files in the specified folder with file-type extension of .csv and/or .json are copied.

s3://veevaQA-myBucket/myFolder/regex("^(.*)(?=_PA_)")

Only files in the specified folder with filenames matching the regular expression are copied.

s3://veevaQA-myBucket/myFolder/regex("^(.*)(?=_PA_)");*.json

Only files in the specified folder with filenames matching the regular expression and/or with a file-type extension of .json are copied.

-

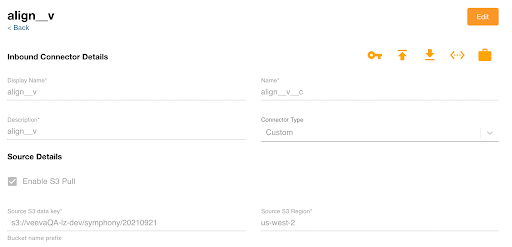

Select Save.

-

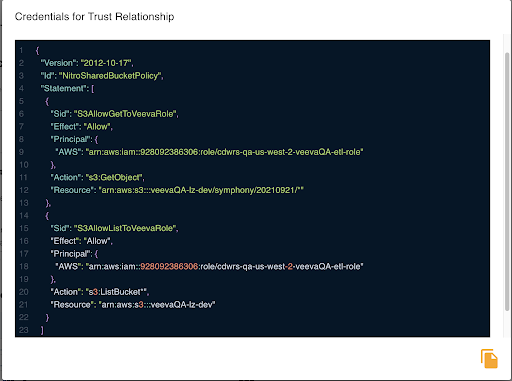

Select the Key icon.

-

Select the Copy icon.

-

Paste this information into an existing or new S3 bucket policy to modify the trust relationship configuration of your AWS S3 bucket.

-

Return to the Inbound Connector page in Nitro and select the Verify Connection icon. This step is optional.
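Each data key designation listed above combines a bucket, an optional folder, and optional semicolon-separated filters. A filter is either a wildcard pattern or a `regex("...")` expression. This Python sketch shows one way to interpret a designation when deciding which object keys to copy. It is not Nitro's implementation, and it makes several assumptions:

- The regex is applied with a search, not a full match.
- `;` never appears inside a regex.
- Compressed `.gz` and `.zip` files are matched against their uncompressed name.

The helper names `parse_data_key` and `should_copy` are hypothetical.

```python
import fnmatch
import re
from dataclasses import dataclass, field
from typing import List

# Assumption: a compressed copy (for example, data.csv.gz) is matched against
# its uncompressed name. Nitro's actual list of suffixes may differ.
COMPRESSION_SUFFIXES = (".gz", ".zip")


@dataclass
class DataKey:
    bucket: str
    folder: str  # "" means the whole bucket
    wildcards: List[str] = field(default_factory=list)
    regexes: List[str] = field(default_factory=list)


def parse_data_key(key: str) -> DataKey:
    """Split an s3:// data key designation into bucket, folder, and filters."""
    if not key.startswith("s3://"):
        raise ValueError("data key must start with s3://")
    bucket, _, path = key[len("s3://"):].partition("/")
    # Filters are separated by ';' (assumes ';' never appears inside a regex).
    first, *extra = path.split(";")
    filters: List[str] = []
    if "regex(" in first:
        idx = first.index("regex(")
        folder, filters = first[:idx], [first[idx:]]
    else:
        head, _, last = first.rpartition("/")
        if any(ch in last for ch in "*?["):
            folder, filters = head, [last]
        else:
            folder = first  # no filter: the whole path names the folder
    dk = DataKey(bucket=bucket, folder=folder.strip("/"))
    for f in filters + extra:
        if f.startswith('regex("') and f.endswith('")'):
            dk.regexes.append(f[len('regex("'):-2])
        elif f:
            dk.wildcards.append(f)
    return dk


def should_copy(dk: DataKey, object_key: str) -> bool:
    """Decide whether an object key matches the parsed designation."""
    prefix = dk.folder + "/" if dk.folder else ""
    if not object_key.startswith(prefix):
        return False
    if not dk.wildcards and not dk.regexes:
        return True  # no filters: copy everything under the folder or bucket
    name = object_key.rsplit("/", 1)[-1]
    base = name
    for suffix in COMPRESSION_SUFFIXES:
        if base.endswith(suffix):
            base = base[: -len(suffix)]
            break
    if any(fnmatch.fnmatchcase(base, p) for p in dk.wildcards):
        return True
    return any(re.search(rx, name) for rx in dk.regexes)
```

For example, with `s3://veevaQA-myBucket/myFolder/*.csv` the sketch copies `myFolder/a.csv.gz` but not `myFolder/a.json`.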

Detect and Pull New Files by Object Using Enable S3 Pull

You can configure a scheduled job to copy only new files for a given table object. The job detects which files were already copied and successfully loaded for that object. To set this up, create an upload file named pullfromS3config.yml in the upload/T/I/C/lastProcessed folder.

Existing connectors that use Enable S3 Pull without this upload file continue to operate as before, without the new-file detection logic.

The upload file must contain the following:

| Key | Value Description | Example |
|---|---|---|
| `tableObjectName` | Staging table name | `symphony_reassign_stg__c` |
| `fileNamePattern` | Can be text, wildcard, or regex | `SW_P_CSREASSIGN_*.txt` |
| `batchSize` | | `-1` |
| `originalStartBatchAfter` | File name after which files are pulled in ascending order | `SW_P_CSREASSIGN_20220204.txt.gz` |

The originalStartBatchAfter key is only used the first time the S3 pull is executed with the new version.